Researchers conducted experimental surveys with more than 1,000 adults in the U.S. to evaluate the relationship between AI disclosure and consumer behavior.

The findings consistently showed that products described as using artificial intelligence were less popular.

“When AI is mentioned, it tends to lower emotional trust, which in turn decreases purchase intentions,”

Seems like people are getting tired of AI.

If a product says “AI” on it, and it’s not talking about NPCs in a video game, I assume the product is shitty and took 30x as much energy to produce as a human-made product of the same quality.

Well, that’s true. I was looking at a Zojirushi rice cooker.

One of its selling points is: ‘Advanced fuzzy logic technology with AI (Artificial Intelligence) “learns” and adjusts the cooking cycle to get perfect results’

I immediately said no thanks and looked for another model without that, and probably cheaper. It’s a rice cooker FFS!

How does it determine better results? oO

That’s the problem with most marketing: it’s unspecific, raising questions rather than answering them. It’s vague and phrased only in positive terms rather than presenting actual information.

I mean, they wrote “learn” with quotation marks so… 🤷🏻♂️

Well, at least that’s more honest than many, many “researchers”.

- Advanced fuzzy logic technology with AI (Artificial Intelligence) “learns” and adjusts the cooking cycle to get perfect results

- Superior induction heating (IH) technology generates high heat and makes fine heat adjustments resulting in fluffier rice

- “My Rice (49 Ways)” menu setting – Just input how the rice turned out, and the rice cooker will make small changes to the cooking flow until it gets it the way you like it

Based on the description, the so-called “AI” simply adjusts the cooking time based on user feedback. As a marketing device, that would be hilarious if it weren’t so sad.

So after it’s done you can adjust its cooking time, but instead of giving you a cook-time knob to turn, they pretend it’s AI?

Pretty much. But instead of adjusting it like “cook it for less/more time”, you say “it’s raw/mushy”. Or at least that’s what I think, based on the product info, but I might be wrong.

And… yeah, it’s all pretend. Just like “smart” some years ago.

It probably has the twin benefits of needing a crummy smartphone app and an internet connection though. Knobs lack these delights.

What a wonderful user experience! No rice is fine if the connection is down, right?

But Zojirushi is a legitimately good brand, and I’d make an exception for them unless they became enshittified.

I know, that’s why I’m staying with that brand. I’m just looking for another model; I probably don’t need all the fussy features of the top-of-the-line model.

Advanced fuzzy logic technology with AI (Artificial Intelligence) “learns” and adjusts…

I swear there was a dishwasher or something in either Sims 1 or 2 that had damn near this exact description.

For what it’s worth, they’ve had a “Neuro Fuzzy” rice cooker (https://www.zojirushi.com/app/product/nszcc) for years; ours is at least 10 years old at this point. I would bet this is a trivial extension of that, using some decision tables supplemented with heat feedback, with only the addition of a user feedback mechanism rather than any true “AI”.
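To show how little machinery a “My Rice”-style feedback loop could need, here is a minimal hypothetical sketch. It assumes the cooker stores a single cook-time offset and nudges it by a fixed step after each “raw” or “mushy” rating. The names `Feedback`, `adjust_offset`, and `cook_time`, the 30-second step, and the 3-minute limit are all made up for illustration. This is not Zojirushi’s actual algorithm, which isn’t public.

```python
from enum import Enum


class Feedback(Enum):
    # Hypothetical ratings a user might enter after a cook.
    TOO_HARD = "raw"
    JUST_RIGHT = "ok"
    TOO_SOFT = "mushy"


STEP_S = 30         # assumed adjustment per rating, in seconds
MAX_OFFSET_S = 180  # assumed cap on how far the profile may drift


def adjust_offset(offset_s: int, feedback: Feedback) -> int:
    """Nudge the stored cook-time offset one step based on user feedback."""
    if feedback is Feedback.TOO_HARD:
        offset_s += STEP_S  # underdone: cook a little longer next time
    elif feedback is Feedback.TOO_SOFT:
        offset_s -= STEP_S  # mushy: cook a little shorter next time
    # Clamp so repeated ratings cannot push the cycle to extremes.
    return max(-MAX_OFFSET_S, min(MAX_OFFSET_S, offset_s))


def cook_time(base_s: int, offset_s: int) -> int:
    """Total cook time: the base program plus the learned offset."""
    return base_s + offset_s


if __name__ == "__main__":
    offset = 0
    for rating in [Feedback.TOO_HARD, Feedback.TOO_HARD, Feedback.JUST_RIGHT]:
        offset = adjust_offset(offset, rating)
    print(cook_time(2700, offset))  # 2700 + 60 = 2760
```

In other words, it’s a cook-time knob where you turn it by saying “raw” or “mushy” instead of “more” or “less”.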

negative response to AI disclosure was even stronger for “high-risk” products and services, […] such as expensive electronics, medical devices or financial services. Because failure carries more potential risk, […] mentioning AI for these types of descriptions may make consumers more wary […]

That sounds like a rational reaction.

There’s a lot of hand-waving when companies talk about AI safety. I would be more likely to pay for a product with some AI if the marketing promoted its effectiveness without highlighting AI than if it mentioned AI with vague assurances about safety.

I would be more worried about the fact that the AI-enabled device likely needs an internet connection to function. That means the manufacturer can take away features or brick the device whenever they want to.

That’s true. “AI-enabled” is usually a hint of over engineering and unnecessary collection of data.

AI-enabled is the new “smart” bullshit. I wonder what the next buzzword will be.

Just because it’s something we already think is obvious doesn’t mean it’s a bad thing that actual studies are being done to prove it!

True dat.

“AI” has gone straight onto the list of so-called features that will make me drop a product like it’s on fire:

❌ Uses “artificial intelligence”

❌ Needs an internet connection (barring actual computers)

❌ Always-on microphone/camera

❌ Phones home to manufacturer’s or third party servers

❌ You buy the hardware, we lease you the software

❌ Fire hazard

❌ Toxicity and/or radiation

❌ Exposed wiring

❌ “Spring surprise” chocolate variant

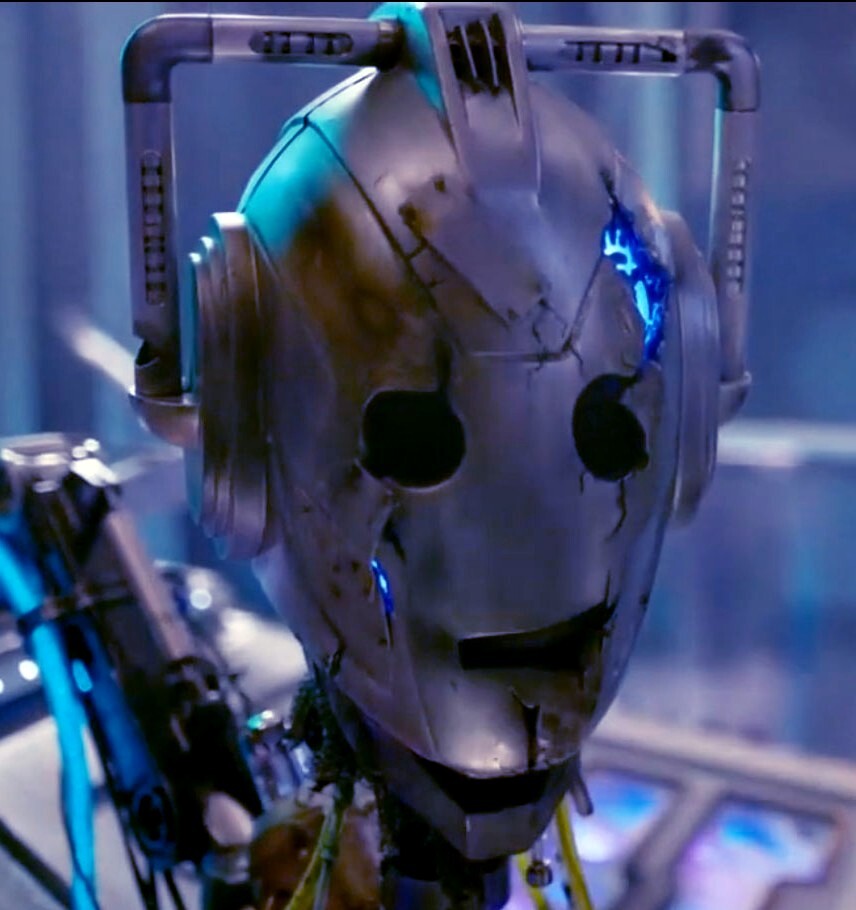

❌ Actual killer bot

Computers don’t generally “need” an Internet connection.

Funny, radiation and killer bot are 2 of my top features.

Is that you, Doctor Doom?

Is your house just an oven and a light switch?

Every netsec admin I know lives like that and is DISGUSTED at how many “smart” appliances I have.

I have a fully furnished kitchen and apartment, several well-stocked bookcases and more light switches than I care to use. You will be shocked to learn that I own not a single smart appliance beyond my phone and computer. And I’m not even a netsec admin 🤷

Mentioning AI in marketing signals:

- Our management has no vision and chases fads.

- Once this fad passes you’ll be on your own as we move on to the next one.

- We think you are quite dumb and won’t see how vapid our marketing is.

- The product probably requires an internet connection and may not work without one.

- The product probably depends on a cloud service that could be withdrawn at any time.

- The product probably spies on you.

- We cheap out on things that would be better done by people paid to do them.

- We can’t think of anything more specific that distinguishes our product.

- You’re paying for features you don’t want or need.

- You’ll be at the mercy of our software updates, which at some point will stop coming and then who knows if the product will keep working.

- Even before we stop supporting it, the product will only work about half the time.

Also:

- Our product might be wrong sometimes, in those critical moments when the system encounters a new, unseen problem that isn’t in its training dataset.

- Our system might output a lot of junk data, by design or by accident, robbing your clients of their time.

I have seen several “AI Bible apps” advertised. I don’t even want to ask what sort of concoction they’re making.

They’re trying to praise the Machine God; little do they know the Omnissiah considers abominable intelligence a deadly sin.