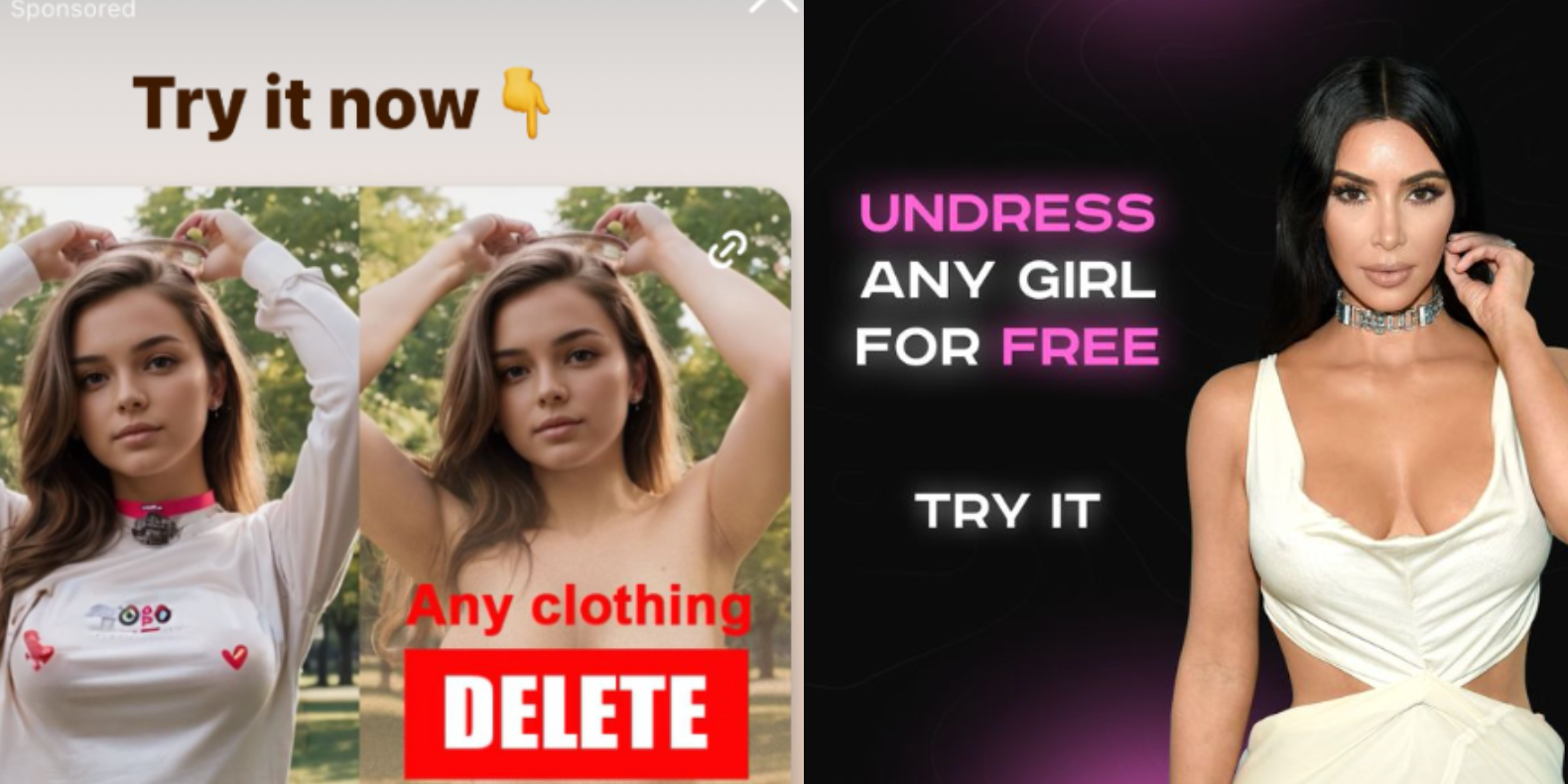

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden in the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has taken down several of these ads previously, many ads that explicitly invited users to create nudes, along with some ad buyers, were still up until I reached out to Meta for comment. Some of these ads were for the best known nonconsensual “undress” or “nudify” services on the internet.

Yet another example of multibillion-dollar companies that don’t curate their content because it’s too hard and expensive. Well, too bad; maybe you only profit 46 billion instead of 55 billion. Boo hoo.

It’s not that it’s too expensive, it’s that they don’t care. They won’t do the right thing until and unless they are forced to, or it affects their bottom line.

An economic entity cannot care; I don’t understand how people expect them to. They are not human.

Economic entities aren’t robots, they’re collections of people engaged in the act of production, marketing, and distribution. If this ad/product exists, it’s because people made it exist deliberately.

No they are slaves to the entity.

They can be replaced

Everyone from top to bottom can be replaced

And will be unless they obey the machine’s will

It’s crazy talk to deny this fact because it feels wrong

It’s just the truth and yeah, it’s wrong

> Everyone from top to bottom can be replaced

Once you enter the actual business sector and find out how much information is siloed or sequestered in the hands of a few power users, I think you’re going to be disappointed to discover this has never been true.

More than one business has failed because a key member of the team left, got an ill-conceived promotion, or died.

> Well too bad maybe you only profit 46 billion instead of 55 billion.

I can’t possibly imagine this quality of clickbait is bringing in $9B annually.

Maybe I’m wrong. But this feels like the sort of thing a business does when it’s trying to juice the same lemon for the fourth or fifth time.

It remains fascinating to me how these apps are being responded to in society. I’d assume part of the point of seeing someone naked is to know what their bits look like, while these just extrapolate with averages (and likely, averages of glamor models). So we still don’t know what these people actually look like naked.

And yet, people are still scorned and offended as if they were.

Technology is breaking our society, albeit in places where our culture was vulnerable to being broken.

I suspect it’s more affecting for younger people who don’t really think about the fact that in reality, no one has seen them naked. Probably traumatizing for them and logic doesn’t really apply in this situation.

Wtf are you even talking about? People should have the right to control if they are “approximated” as nude. You can wax poetic about how it’s not necessarily correct, but that’s because you are ignoring the woman who did not consent to the process. Like, if I posted a nude then that’s on the internet forever. But now, any picture at all can be made nude and posted to the internet forever. You’re entirely removing consent from the equation, you ass.

Totally get your frustration, but people have been imagining, drawing, and photoshopping people naked since forever. To me the problem is if they try and pass it off as real. If someone who can draw photorealistic pieces drew someone naked, we wouldn’t have the same reaction, right?

It takes years of practice to draw photorealism, and days if not weeks to draw a particular piece. That is absolutely not the same as any jackass with an internet connection and 5 minutes being able to create an equally or more realistic version.

It’s really upsetting that this argument keeps getting brought up. Because while guys are being philosophical about how it’s theoretically the same thing, women are experiencing real world harm and harassment from these services. Women get fired for having nudes, girls are being blackmailed and bullied with this shit.

But since it’s theoretically always been possible, somehow churning through any woman you find on Instagram isn’t an issue.

> Totally get your frustration

Do you? Since you aren’t threatened by this, yet another way for women to be harassed is just a fun little thought experiment.

Well that’s exactly the point from my perspective. It’s really shitty here in the stage of technology where people are falling victim to this. So I really understand people’s knee-jerk reaction to throw on the brakes. But then we’ll stay here where women are being harassed and bullied with this kind of technology. The only paths forward, theoretically, are to remove it altogether or to make it ubiquitous background noise. Removing it altogether, in my opinion, is practically impossible.

So my point is that a picture from an unverified source can never be taken as truth. But we’re in a weird place technologically, where unfortunately it is. I think we’re finally reaching a point where we can break free of that. If someone sends me a nude with my face on it like, “Is this you?!!”. I’ll send them one back with their face like, “Is tHiS YoU?!??!”.

We’ll be in a place where we as a society cannot function taking everything we see on the internet as truth. Not only does this potentially solve the AI nude problem, it can solve the actual nude leaks / revenge porn, other forms of cyberbullying, and mass distribution of misinformation as a whole. The internet hasn’t been a reliable source of information since its inception. The problem is, up until now, it’s been just plausible enough that the gullible fall into believing it.

How dare that other person I don’t know and will never meet gain sexual stimulation!

My body is not inherently for your sexual stimulation. Downloading my picture does not give you the right to turn it into porn.

Did you miss what this post is about? In this scenario it’s literally not your body.

There is nothing stopping anyone from using it on my body. Seriously, get a fucking grip.

It’s all so incredibly gross. Using “AI” to undress someone you know is extremely fucked up. Please don’t do that.

Would it be any different if you learned how to sketch or photoshop and did it yourself?

This is also fucking creepy. Don’t do this.

But yet, we’ve (almost) all imagined someone we know naked.

404 Media is worker owned; you should pay them.

Good, let all celebs come together and sue zuck into the ground

It’s funny how many people leapt to the defense of the Section 230 liability protection (from Title V of the Telecommunications Act of 1996), as this helps shield social media firms from assuming liability for shit like this.

Sort of the Heads-I-Win / Tails-You-Lose nature of modern business-friendly legislation and courts.

Is there such a thing as a consensual undressing app? Seems redundant

I assume that’s what you’d call OnlyFans.

That said, the irony of these apps is that it’s not the nudity that’s the problem, strictly speaking. It’s taking someone’s likeness and plastering it on a digital mannequin. What social media has enabled is the online equivalent of going through a girl’s trash to find an old comb, pulling the hair off, and putting it on a Barbie doll that you then use to jerk/jill off.

What was the domain of 1980s perverts from comedies about awkward high schoolers has now become a commodity we’re supposed to treat as normal.

Idk how many people are viewing this as normal, I think most of us recognize all of this as being incredibly weird and creepy.

> Idk how many people are viewing this as normal

Maybe not “Lemmy” us. But the folks who went hog wild during The Fappening, combined with younger people who are coming into contact with pornography for the first time, make a ripe base of users who will consider this the new normal.