I upgraded last year from an i7-4700k to an i7-12700k and from a GTX 750Ti to an RTX 3060Ti, because 8 threads and 2GB of VRAM were finally not enough for modern games. My old machine still runs as a home server.

The jump was huge, and I hope I’ll have money to upgrade sooner this time, but if needed, I can totally see my current machine still working just fine 6-8 years from now.

My wife is on a 1070 and I am on a 2070, both at 1440p. Her fave game is 7d2d, and it runs perfectly.

7d2d

Just a heads-up: this isn’t an acronym most people know. I consider myself knowledgeable about gaming, and I had to look it up.

I’m still pushing a ten-year-old PC with an FX-8350 and a 1060. Works fine.

Fine for what? YouTube? That CPU had poor performance even when it was released.

Lol what? No it didn’t. It just runs really hot.

I didn’t think of my computer as old until I saw your comment with “ten years” and its GPU in the same sentence. When did that happen??

We reached the physical limits of silicon transistors. Speed is determined by transistor size (to a first approximation) and we just can’t make them any smaller without running into problems we’re essentially unable to solve thanks to physics. The next time computers get faster will involve some sort of fundamental material or architecture change. We’ve actually made fundamental changes to chip design a couple of times already, but they were “hidden” by the smooth improvement in speed/power/efficiency that they slotted into at the time.

My four-year-old work laptop had a quad-core CPU. The replacement laptop issued to me this year has a 20-core CPU. The architecture change has already happened.

I’m not sure that’s really the sort of architectural change that was intended. It’s not fundamentally altering the chips in a way that makes them more powerful, just packing more into the system to raise its overall capabilities. It’s like claiming you had found a new way to make a bulletproof vest twice as effective by doubling the thickness of the material, when I think the original comment is talking about something more like finding a new base material or altering the weave/physical construction to make it weigh less while providing the same stopping power, which is quite a different challenge.

Except the 20-core laptop I have draws the same wattage as the previous one, so to go back to your bulletproof vest analogy, it’s like doubling the stopping power by adding more plates, except all the new plates weigh the same as and take up the same space as all the old plates.

A lot of the efficiency gains in the last few years come from better chip design, in the sense that they’re improving their on-chip algorithms and how the CPU decides to cut power to various components. An easy example is how much more power-efficient an ARM-based processor is compared to an equivalent x86-based processor. The fundamental set of processes designed into the chip is based on those instruction set standards (ARM vs x86), and that in and of itself contributes to power efficiency. I believe RISC-V is also supposed to be a more efficient instruction set.

Since the speed of the processor is limited by how far the electrons have to travel, miniaturization is really the key to single-core processor speed. There has still been some recent success in miniaturizing the chip’s physical components, but not much. The current generation of CPUs has to deal with errors caused by quantum tunneling, and the smaller you make them, the worse it gets. It’s been a while since I learned about chip design, but I do know that we’ll have to make a fundamental chip “construction” change if we want faster single-core speeds. For example, at one point, power was delivered to the chip components on the same plane as the chip itself, but that was running into density and power (thermal?) limits, so someone invented backside power delivery and chips kept on getting smaller. These days, the smallest features on a chip are maybe four dozen atoms wide.

I should also say, there’s not the same kind of pressure to push single-core speeds higher and higher like there used to be. These days, pretty much any chip can run fast enough to handle most users’ needs without issue. There are only so many operations per second needed to run a web browser.

I am also using a ~10-year-old PC, but mine is kinda lower end compared to yours.

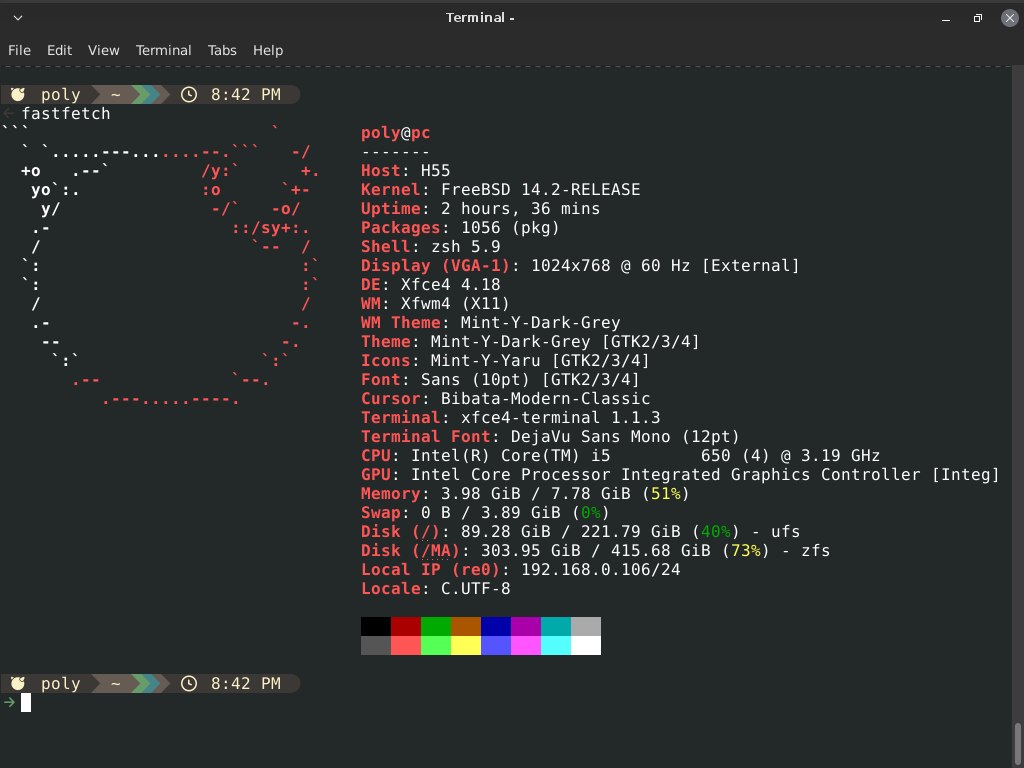

Genuine curiosity… Why BSD?

Also… There were significant improvements with Intel Sandy Bridge (2xxx series), and the parent is using an equivalent to that. Sandy Bridge and later (OP seems to be on Haswell or Ivy Bridge) is truly the mark of “does everything”… I’ve only bothered to upgrade because of CPU-hungry sim games that eat cores.

https://unixdigest.com/articles/technical-reasons-to-choose-freebsd-over-linux.html

Mine is Clarkdale, btw, just FYI.

Besides that, Linux just doesn’t support my hardware. In every distro and every kernel, heck, even the live Arch ISO, there’s this weird issue where the PC randomly freezes with weird screen glitches, and the only way to make it work again is a forced hard reboot.

Same here!

My trusty backup is still an FX-8320; the main is an i7-8700K with a 1070 Ti.

For me, the most important reason to upgrade things is security updates. E.g., if you have an old smartphone, it might not get security updates anymore.

Some people don’t seem to care, but I get paranoid about hackers breaking into my phone in some way.

Phones suffer a lot from forced obsolescence. More often than not, the hardware is fine, but the OEM abandons it because “lol fuck you, buy new shit”. Anyone who says that a Samsung S7 “can’t handle current apps” is out of their mind.

Other than camera and software, there’s hardly any reason to buy new phones over flagships from some years ago.

This. My mobile is over six years old. It got security updates until 2022, but I don’t even care that much about security updates. What concerns me more is the buy-a-new-phone-every-year-because-reasons mentality: buy new shit, get spybloatware. Skynet is the virus. My old one runs perfectly fine, and I’ll buy a new one when it breaks. Even critical apps like banking are doing fine. It’s not like the whole architecture of the OS changes every year, right?

Ugh, my banking app doesn’t work on my phone any more because it’s “old”. It came out in 2017.

There is no way in hell a 2014 computer is able to run modern games on medium settings at all, let alone run them well. My four-year-old computer (Ryzen 5 4000, GTX 1650, 16 GB RAM) can barely get 30-40 fps in most modern games at 1080p, even on the absolute lowest settings. Don’t get me wrong, it should still work fine. However, almost no modern games are optimized at all, and the “low” settings are all super fucking high now, so anon is lying out of his ass.

It says the story took place in 2020. And that it played “Most games” on medium settings. 30-40 fps is playable to a lot of people. I’m inclined to believe them.

I could say I still run my 2014 (or 15, I don’t remember) PC, but it’s Ship of Theseus’d at this point, the only OG parts left are the CPU, PSU, case, and mobo.

I had an i5-2500K from when they came out (I think 2011? Around that era) until 2020, overclocked to 4.5 GHz, and it ran solid the whole time. I upgraded the graphics card, drives, memory, etc., but incrementally as needed. Now I’m on an i7-10700K. The other PC has been sat on the side and may become my daughter’s or wife’s at some point.

Get what you need, and incremental upgrades work.

I was rocking an i7-4790K and a GTX 970 until about two years ago; now I’m rocking an i5-10400F and one of Nvidia’s chip-shortage-era RTX 2060s. My wife is still on an i5-4560 (from memory) and an RX 560, and that’s really getting long in the tooth with only 4 threads, and the budget GPU doesn’t help matters much.

Later this year, when Windows 10 gets closer to EOL, I figure I’ll refresh her machine and upgrade the SSD in mine.

I just installed Linux on my old 2500k @ 4.5GHz system a few days ago! I haven’t actually done much with it yet because I also upgraded the OS on a newer system that is taking over server responsibilities. But you are correct on the year with 2011. I built mine to coincide with the original release of Skyrim.

The install went quickly (Linux Mint, so as expected), and the resulting system is snappy yet full-featured. It’s ready for another decade of use. Maybe it will become a starter desktop for teaching my second grader. (Educational stuff, as well as trying a mouse for games compared with a controller.)

I got screwed over with the motherboard, as it had to go back because of bimetallic contacts in the SATA ports that could wear out and stop working, so there was a big recall of all the boards… It was an amazing system, though, and if I hadn’t found the computer I’m currently running for an absolute steal, I’d probably still be running it with a 3060 as a pretty potent machine.

Of course, then I’d never have the experience of just HOW FAST NVME IS! :-D

Hey, mine is from 2014 too! It runs Linux and is fast enough for Minecraft at 30 fps and The Sims 4.

I’ve been rocking a 1080ti since launch. Upgraded my 4th gen i7 to a 9th gen i9 on a sale a few years back. SSD upgraded when I got some that were going to be recycled.

Eventually I want to move to team red for Linux compatibility. Other than that, I am sticking with what I have. (It doesn’t help that I have two small children that all my money goes to.)

4770/1060 gang over here. Upgrading to a free 9600 this weekend.

I genuinely don’t understand this. One time my friend bought an RTX 3060 (she was using an RX 580).

I asked, “Oh cool, what new games are you gonna play?” She said, “None, I’m just gonna play the same ones.” I asked, “What was wrong with the old card?” And she said, “Idk, just felt like I needed a new one.” We play games like TF2…

I just don’t get this type of behaviour. She also has like 14 pairs of sneakers.

My i5 3450 is really showing its limits, but I’m broke as fuck 🤷

My PC was made in 2014 and I upgraded it, but it died in 2022 due to mishandling. If you keep your PC clean and don’t move it, it can last even longer!

I’m still using the i7 I built back in 2017 or so… I upgraded to an SSD some years ago and will be upping the RAM to 64 GB (the max the motherboard can handle) in a few days when it arrives…

I just upgraded this year to an R9 5900X from my old R5 2600, though I’m still running a 1070.

I do video editing and more generally CPU-intensive stuff on the side, as well as a lot of multitasking, so it’s worth the money, in the long run at least.

I also mostly play Minecraft and Factorio, so.

Ryzen 5000 is a great upgrade path for those who don’t want to buy into AM5 yet, and it’s very affordable. 7000 is not worth the money unless you get a good deal, and the same goes for 9000, though you could justify it if you’re getting a new motherboard and RAM anyway.

I’m rocking a 5800X and see no reason to go to 7000 or 9000 anytime soon. It’s been great since I built the PC.